The Art of Observable Anomalies: Reading Ad-Tech Location Data Like an OSINT Pro

By Jeffrey Mader

November 2, 2025

Commercially available information (CAI) that looks like “cell-phone geolocation” is everywhere now: SDK pings from mobile apps, bidstream coordinates from ad auctions, and vendor-inferred “visits” to places of interest. It’s incredibly tempting to treat those dots on the map as GPS gospel. Don’t. If you work in OSINT, the right mindset is closer to probabilistic telemetry: useful, yes, but only after you’ve scrubbed out the artifacts that most often lead analysts astray.

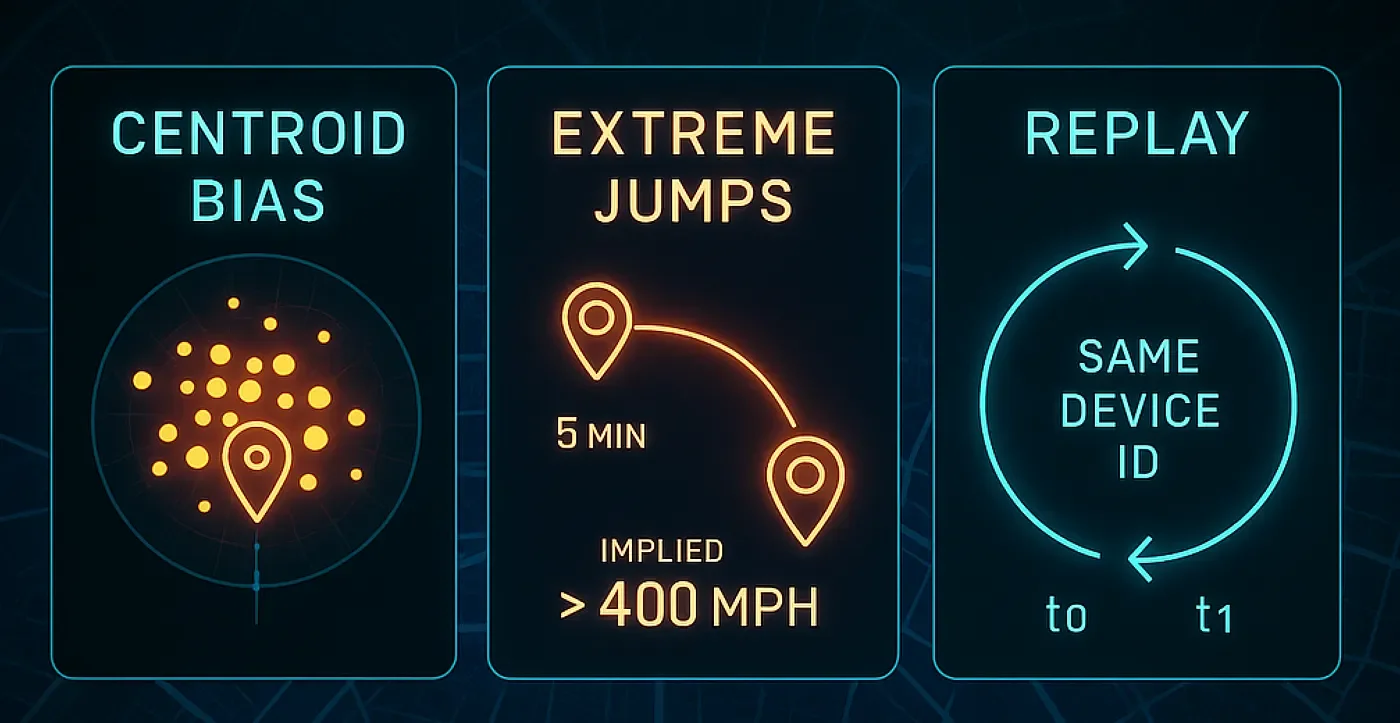

Let’s talk about the big three: centroid bias, extreme time–distance jumps, and replay data. Along the way, I’ll offer practical tells and fixes you can fold into your pipeline, in plain language rather than a thicket of bullets.

What you’re actually looking at

Most “location” in ad-tech is a cocktail. Some rows are true GNSS (GPS) readings; others are Wi-Fi or cell-ID triangulation; a surprising number are IP-based inferences; and many are “snapped” by vendors to a venue polygon or a grid to make downstream analytics easier. When the system falls back to IP or polygon defaults, you start to see patterns that feel a little too neat: centers of cities lighting up like bonfires, parks with suspiciously steady “foot traffic,” and lattices of points aligned along clean decimals. These are not crowds of invisible people. They’re the map’s idea of where people might be when the source data is weak (Poese et al. 2011; MaxMind 2023; Feamster et al. 2021).
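One quick way to surface that lattice effect is to measure, per source, how many points sit exactly on a coarse grid. The sketch below is a minimal illustration. It assumes rows are dicts with hypothetical `source`, `lat`, and `lon` keys and uses a 0.01° grid only as an example step. Fixes reported to five or six decimals should almost never land on such a grid. A high share therefore points to snapping, truncation, or fallback rather than crowds.

```python
from collections import Counter


def on_grid(value: float, step: float, tol: float = 1e-9) -> bool:
    """True if value is a whole multiple of step, allowing for float rounding error."""
    ratio = value / step
    return abs(ratio - round(ratio)) < tol


def snapped_share_by_source(rows, step: float = 0.01) -> dict:
    """Fraction of each source's points whose lat AND lon both sit on a grid of `step` degrees."""
    totals, hits = Counter(), Counter()
    for row in rows:
        totals[row["source"]] += 1
        if on_grid(row["lat"], step) and on_grid(row["lon"], step):
            hits[row["source"]] += 1
    return {src: hits[src] / totals[src] for src in totals}


# Illustrative rows; the source names are made up.
rows = [
    {"source": "sdk_a", "lat": 40.712776, "lon": -74.005974},
    {"source": "ip_b", "lat": 39.5, "lon": -98.35},
    {"source": "ip_b", "lat": 38.97, "lon": -77.74},
    {"source": "ip_b", "lat": 38.96871, "lon": -77.7355},
]
print(snapped_share_by_source(rows))
```

A high share for one source doesn't prove anything by itself. It does tell you which feed to down-weight before you read its clusters as activity.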

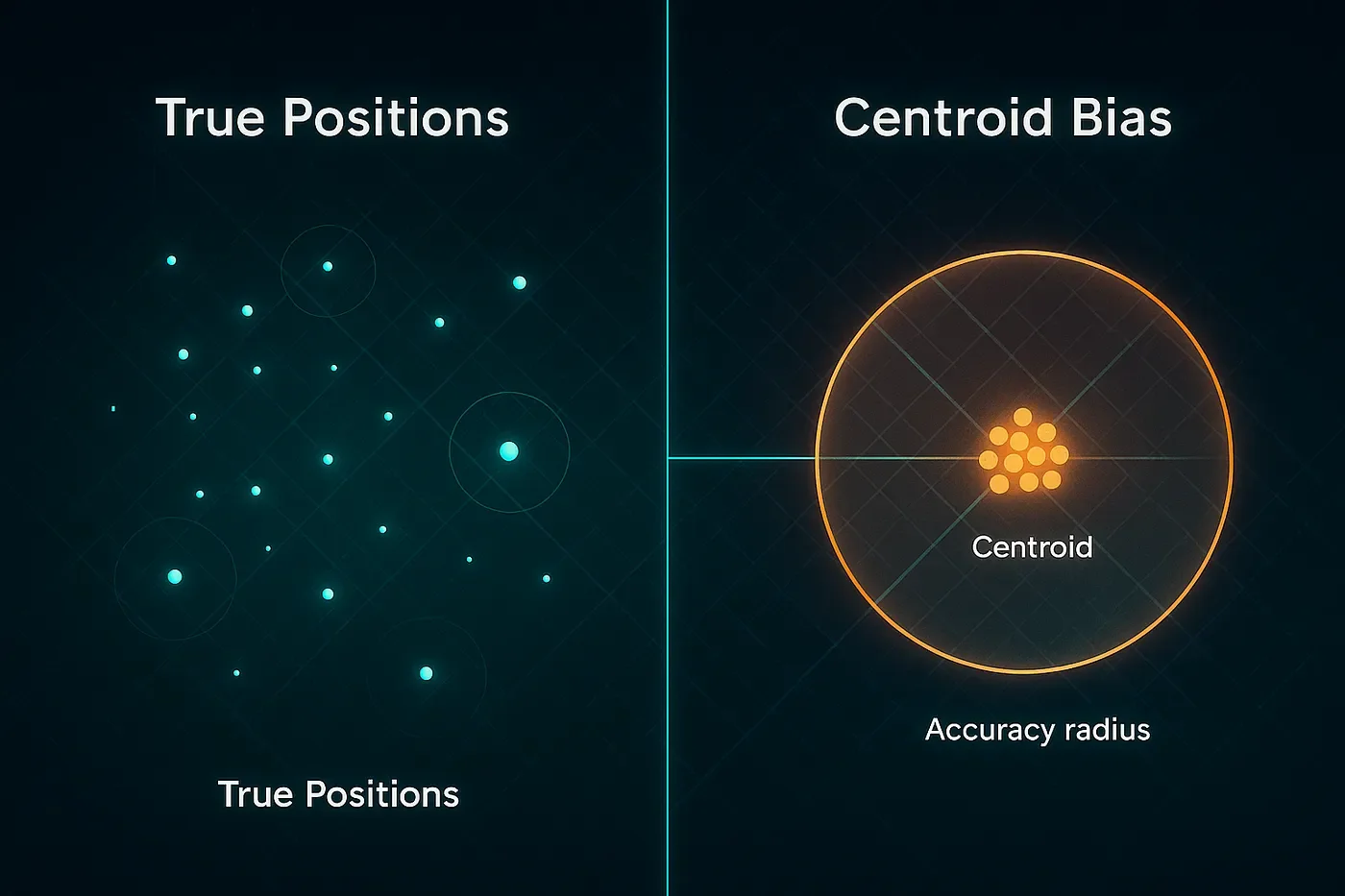

Centroid bias: when “the middle” masquerades as reality

Centroid bias happens when a provider assigns the geometric center of some area (country, state, city, ZIP, or even a store polygon) because it can’t place the device precisely. A famous cautionary tale is the Kansas farm MaxMind once used as a U.S. default: hundreds of millions of IPs were mapped to a single rural property, with real-world consequences for the people who lived there (Hill 2016). That story sticks with analysts for a reason: it’s memorable, and it exposes how a neat-looking dot can be a total guess.

You’ll see a smaller, quieter version of the same effect in places like Middleburg, Virginia. It’s plausible for repeated IPs or mobile provider sub-blocks to resolve to the town center when precision drops, making the middle of Middleburg look like a hotspot. If you’ve ever found a tidy cluster at roughly 38.969 N, −77.735 W and thought, “That’s oddly consistent,” you probably caught a default, not a dinner party waiting in the middle of an intersection for their reservation at the Red Fox Inn (Feamster et al. 2021; MaxMind 2023).

The everyday tell is repetition with suspicious neatness: identical coordinates shared by lots of devices, coordinates ending in .0000 or .5000, or venue attribution that keeps crowning tiny storefronts while big neighbors look empty. When you see that, don’t over-interpret the dot. Ask first: is this really a person, or is it the centroid standing in for uncertainty? The fix is algorithmic common sense: down-weight or exclude low-precision sources (especially IP/carrier-derived points) for venue-level questions; insist on accuracy thresholds (e.g., under 100–150 meters for visit inference); and attribute “visits” with dwell-time and polygon overlap rather than snapping to the nearest centroid (MaxMind 2023; Feamster et al. 2021; Poese et al. 2011). And yes, it helps to keep a small watchlist of known defaults: Stijn de Witt’s catalog of MaxMind’s country fallbacks is a handy seed (de Witt 2016).
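Those tells translate into a few cheap checks. The following is a minimal sketch, not a vendor-specific recipe, and it rests on several assumptions:

- Rows are dicts with hypothetical `device_id`, `source`, `lat`, `lon`, and `accuracy_m` keys.
- `"ip"` and `"carrier"` are the low-precision source labels.
- The 150 m accuracy ceiling, the 50-device threshold, and the 250 m watchlist radius are example values only.

The watchlist entry reuses the Middleburg coordinates above as an illustration.

```python
import math
from collections import defaultdict

EARTH_RADIUS_M = 6_371_000.0
LOW_PRECISION_SOURCES = {"ip", "carrier"}  # hypothetical source labels


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters (spherical-Earth approximation)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def venue_eligible(row, max_accuracy_m=150.0):
    """Keep a row for venue-level questions only if it is precise enough."""
    if row["source"] in LOW_PRECISION_SOURCES:
        return False
    accuracy = row.get("accuracy_m")
    return accuracy is not None and accuracy <= max_accuracy_m


def centroid_suspects(rows, watchlist, min_devices=50, watch_radius_m=250.0, decimals=5):
    """Map suspicious coordinates to the reasons they look like defaults."""
    devices = defaultdict(set)
    for row in rows:
        key = (round(row["lat"], decimals), round(row["lon"], decimals))
        devices[key].add(row["device_id"])

    suspects = {}
    for (lat, lon), ids in devices.items():
        reasons = []
        if len(ids) >= min_devices:
            reasons.append(f"{len(ids)} distinct devices share this exact coordinate")
        for name, (wlat, wlon) in watchlist.items():
            if haversine_m(lat, lon, wlat, wlon) <= watch_radius_m:
                reasons.append(f"within {watch_radius_m:.0f} m of watchlist entry {name!r}")
        if reasons:
            suspects[(lat, lon)] = reasons
    return suspects


watchlist = {"middleburg_center": (38.969, -77.735)}  # illustrative entry
rows = [{"device_id": f"d{i}", "source": "ip", "lat": 38.969, "lon": -77.735} for i in range(3)]
rows.append({"device_id": "x", "source": "gnss", "lat": 40.0, "lon": -75.0, "accuracy_m": 12.0})

print([r["device_id"] for r in rows if venue_eligible(r)])
print(centroid_suspects(rows, watchlist, min_devices=3))
```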

Extreme time–distance jumps: when physics raises its hand

Another red flag is the “teleport.” A device appears in Midtown and, five minutes later, in Milwaukee. For devices that should be moving on the ground, implied speeds suddenly look like those of commercial jets. Usually this isn’t a spy novel; it’s caching, batching, misordered events, or a stream that delivered late and got sorted by receipt time instead of event time. Sometimes it’s spoofing; often it’s plumbing.

Your best friend here is a simple physics check. Compute speed and acceleration between consecutive pings and chop the track where it gets absurd. Sort by the time the event actually happened, not when your system ingested it. If many devices jump to the exact same coordinates at the exact same second, you may be staring at centroid fallout or a copy-paste anomaly rather than a flash mob. The remedy is dull but effective: enforce a maximum “age” for what you treat as near-real-time, segment tracks that violate speed/acceleration bounds, and log which suppliers are correlated with the weirdness so you can push quality conversations to the right place.
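Here is a minimal sketch of the physics check for a single device’s track. It assumes pings are dicts with hypothetical `event_time` (a `datetime`), `lat`, and `lon` keys. It sorts by event time rather than ingest time, then starts a new segment whenever implied speed exceeds a ground-vehicle ceiling. The 70 m/s (about 250 km/h) default is an illustrative value, not a standard. The sketch checks speed only; you could add an acceleration bound the same way by differencing consecutive speeds.

```python
import math
from datetime import datetime


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters (spherical-Earth approximation)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6_371_000.0 * math.asin(math.sqrt(a))


def segment_track(pings, max_speed_mps=70.0):
    """Split one device's pings into segments wherever implied speed is implausible."""
    ordered = sorted(pings, key=lambda p: p["event_time"])  # event time, not ingest time
    segments, current = [], []
    for ping in ordered:
        if current:
            prev = current[-1]
            dt = (ping["event_time"] - prev["event_time"]).total_seconds()
            dist = haversine_m(prev["lat"], prev["lon"], ping["lat"], ping["lon"])
            if dt > 0:
                speed = dist / dt
            else:
                # Same timestamp: fine if stationary, impossible if the point moved.
                speed = math.inf if dist > 0 else 0.0
            if speed > max_speed_mps:
                segments.append(current)
                current = []
        current.append(ping)
    if current:
        segments.append(current)
    return segments


# Midtown at 12:00, then Milwaukee five minutes later (input deliberately out of order).
pings = [
    {"event_time": datetime(2025, 11, 2, 12, 5), "lat": 43.0389, "lon": -87.9065},
    {"event_time": datetime(2025, 11, 2, 12, 0), "lat": 40.7549, "lon": -73.9840},
    {"event_time": datetime(2025, 11, 2, 12, 10), "lat": 43.0400, "lon": -87.9050},
]
for seg in segment_track(pings):
    print([p["event_time"].strftime("%H:%M") for p in seg])
```

Segmenting rather than deleting keeps both sides of the jump available for review.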

Replay data: yesterday’s pings in today’s clothes

“Replay” is the ad-tech world’s term for old pings that get re-emitted as new. The pattern is simple enough: same device ID, same coordinates, a fresh timestamp, maybe with a tiny tweak to the time or a millimeter of coordinate “salt” to sneak past naive de-dupe. Why it happens is equally mundane: multi-hop reselling incentivized by volume, SDK spoofing, and the usual bag of mobile-ad fraud tricks (Unacast 2025; Adjust 2021; AppsFlyer n.d.; Wikipedia 2025).

If your analysis cares about when and where, replay is an invisible tripwire. The tells are rhythmic: identical pairs of device and location resurfacing at strangely regular intervals; near-perfect copies of yesterday’s path with minute-level shifts; sudden spikes tied to a single supplier or an oddball identifier version. The countermeasures are procedural. Treat event timestamps as truth and set a firm staleness window. Create a lightweight fingerprint (device, time bucket, rounded lat/lon, plus source) and drop near-duplicates. Favor first-party app sources under direct contract rather than multi-hop aggregates. And when a partner’s replay rate drifts up, quarantine the stream before it poisons your downstream logic (Unacast 2025; Adjust 2021).
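The fingerprint idea is straightforward to sketch. The version below is an illustration under stated assumptions:

- Rows are dicts with hypothetical `device_id`, `event_time` (timezone-aware `datetime`), `lat`, `lon`, and `source` keys.
- The 5-minute bucket and 4-decimal rounding (roughly 11 m of latitude) are example settings.

Rounding absorbs millimeter-scale “salt.” However, fixed buckets and rounding both have edges: two copies that straddle a bucket or rounding boundary still get different keys. Treat this as a first pass rather than a complete replay detector.

```python
from datetime import datetime, timedelta, timezone


def fingerprint(row, bucket_s=300, decimals=4):
    """Composite key: (device, time bucket, rounded lat/lon, source)."""
    bucket = int(row["event_time"].timestamp()) // bucket_s
    return (
        row["device_id"],
        bucket,
        round(row["lat"], decimals),
        round(row["lon"], decimals),
        row["source"],
    )


def drop_near_duplicates(rows, bucket_s=300, decimals=4):
    """Keep the first row per fingerprint; return (kept_rows, number_dropped)."""
    seen, kept, dropped = set(), [], 0
    for row in rows:
        key = fingerprint(row, bucket_s, decimals)
        if key in seen:
            dropped += 1
            continue
        seen.add(key)
        kept.append(row)
    return kept, dropped


t0 = datetime(2025, 11, 1, 9, 0, tzinfo=timezone.utc)
rows = [
    {"device_id": "a", "event_time": t0, "lat": 38.96871, "lon": -77.73551, "source": "s1"},
    # Same ping re-emitted 7 s later with a sub-millimeter coordinate tweak.
    {"device_id": "a", "event_time": t0 + timedelta(seconds=7),
     "lat": 38.968710001, "lon": -77.735510001, "source": "s1"},
    {"device_id": "b", "event_time": t0, "lat": 38.96871, "lon": -77.73551, "source": "s1"},
]
kept, dropped = drop_near_duplicates(rows)
print(len(kept), dropped)
```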

One more wrinkle: some products intentionally do historical retargeting; they aim ads at devices known to be inside a polygon days or weeks ago. That’s fine for marketing, but catastrophic if you inhale it as “live telemetry.” If a stream is historical by design, label it as such at ingestion and keep it out of your real-time dashboards.
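Labeling at ingestion can be as simple as tagging each row before it is routed anywhere. This sketch rests on several assumptions:

- Rows carry hypothetical `source`, `event_time`, and `ingest_time` fields (timezone-aware datetimes).
- The caller supplies a set of sources known to be historical by design.
- Rows use a 15-minute staleness window and a 30-second clock-skew allowance.

All of those values are placeholders for whatever your own SLA says.

```python
from datetime import datetime, timedelta, timezone


def freshness_label(row, historical_sources, max_age=timedelta(minutes=15),
                    clock_skew=timedelta(seconds=30)):
    """Return 'historical', 'invalid', 'live', or 'stale' for one row."""
    if row["source"] in historical_sources:
        return "historical"  # stale by design: never treat as live telemetry
    age = row["ingest_time"] - row["event_time"]
    if age < -clock_skew:
        return "invalid"  # event claims to postdate its own ingestion
    return "live" if age <= max_age else "stale"


def route(rows, historical_sources, **kwargs):
    """Partition rows by label so only 'live' rows reach real-time dashboards."""
    buckets = {"live": [], "stale": [], "historical": [], "invalid": []}
    for row in rows:
        buckets[freshness_label(row, historical_sources, **kwargs)].append(row)
    return buckets


now = datetime(2025, 11, 2, 12, 0, tzinfo=timezone.utc)
rows = [
    {"source": "sdk_a", "event_time": now - timedelta(minutes=2), "ingest_time": now},
    {"source": "sdk_a", "event_time": now - timedelta(hours=3), "ingest_time": now},
    {"source": "retarget_feed", "event_time": now - timedelta(days=9), "ingest_time": now},
    {"source": "sdk_a", "event_time": now + timedelta(hours=1), "ingest_time": now},
]
buckets = route(rows, historical_sources={"retarget_feed"})
print({label: len(items) for label, items in buckets.items()})
```

Rows labeled `stale` or `historical` remain usable for retrospective work; the point is that they never masquerade as current.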

Art and science (and discipline)

Even with clean math, good judgment is non-negotiable. OSINT teams do better when they treat CAI as a probability field with provenance, not a ground-truth breadcrumb trail. That means asking basic questions up front: Which sensors are we seeing? What’s the accuracy radius? How old is this event? How many aggregator hops did it take to get here? Analysts who make those questions routine produce assessments that age well because they know where their confidence should, and shouldn’t, live (Poese et al. 2011; Feamster et al. 2021).

A word on compliance and reputational risk

Location trails are sensitive. Before you ingest, make sure you actually have a legal basis to do what you’re doing, and put retention and minimization rules in writing. If you operate under GDPR, CPRA, or similar regimes, pay close attention to how each one treats geolocation and cross-context behavioral data, including what counts as “selling” or “sharing” it. The press (and regulators) have repeatedly highlighted sensitive-site tracking in ad-tech data. Provenance controls and purpose limitation aren’t optional window dressing; they’re part of not becoming the story (see Unacast 2025 for practical hygiene, and treat it as a floor, not a ceiling).

A practical closing thought

If your map lights up at a park’s center or a small town’s centroid, assume uncertainty before activity. If your tracks outrun physics, assume plumbing before tradecraft. And if a device seems to relive the same day over and over, assume replay before routine. CAI can absolutely support OSINT; just make sure you do the cleaning before you do the concluding.

Here is a simple framework, followed by an abbreviated version, to reference while you work with location data.

Simple framework for identifying anomalies

Provenance & schema

Normalize source, sensor, accuracy_radius, event_time, ingest_time, and device_id. Keep lineage; downstream decisions depend on it.

Row-level gates

Reject missing timestamps, invalid lat/lon, or an accuracy radius above the threshold for your use case.

Track sanity

Compute speed/acceleration between consecutive pings; segment or discard outliers.

Centroid checks

Maintain a table of admin/ZIP/POI centroids (seed it with de Witt 2016 and your observed clusters).

Staleness & replay filters

Enforce a maximum age; dedupe with composite keys; alert on burst-coincidence (many devices at one coordinate/second).

Human-in-the-loop

Audit tiles with high failure rates (e.g., stadiums, airports, known centroids). Review samples per vendor.
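The first two stages and the burst-coincidence alert can be sketched together. This is a minimal illustration, not a reference implementation, and it makes several assumptions:

- The `Ping` record uses the field names from the provenance step, plus `lat`/`lon`.
- The `sensor` vocabulary is hypothetical.
- A missing accuracy radius counts as a failure. This is consistent with requiring source and accuracy up front.
- The burst threshold is an example value.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class Ping:
    device_id: str
    source: str                       # supplier / feed name, kept for lineage
    sensor: str                       # e.g. "gnss", "wifi", "cell", "ip" (hypothetical labels)
    lat: Optional[float]
    lon: Optional[float]
    accuracy_radius: Optional[float]  # meters
    event_time: Optional[datetime]
    ingest_time: Optional[datetime]


def gate_reason(p: Ping, max_accuracy_m: float) -> Optional[str]:
    """Return why a row fails the row-level gates, or None if it passes."""
    if p.event_time is None or p.ingest_time is None:
        return "missing timestamp"
    if p.lat is None or p.lon is None or not (-90 <= p.lat <= 90 and -180 <= p.lon <= 180):
        return "invalid lat/lon"  # also catches NaN, since NaN comparisons are False
    if p.accuracy_radius is None or p.accuracy_radius > max_accuracy_m:
        return "accuracy missing or above threshold"
    return None


def burst_alerts(pings, min_devices=20, decimals=5):
    """Count distinct devices per (rounded coordinate, event second); keep the crowded ones."""
    groups = defaultdict(set)
    for p in pings:
        second = p.event_time.replace(microsecond=0)
        groups[(round(p.lat, decimals), round(p.lon, decimals), second)].add(p.device_id)
    return {key: len(ids) for key, ids in groups.items() if len(ids) >= min_devices}


t = datetime(2025, 11, 2, 12, 0, 0)
good = Ping("d1", "vendor_a", "gnss", 38.9701, -77.7312, 12.0, t, t)
bad = Ping("d2", "vendor_a", "ip", 95.0, -77.73, 5000.0, t, t)
burst = [Ping(f"b{i}", "vendor_b", "gnss", 38.969, -77.735, 20.0, t, t) for i in range(3)]

passed = [p for p in [good, bad, *burst] if gate_reason(p, max_accuracy_m=150.0) is None]
print(gate_reason(bad, 150.0), len(passed))
print(burst_alerts(passed, min_devices=3))
```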

Abbreviated version of the framework

Source & accuracy present? If IP/carrier-derived or accuracy >150 m, down-weight for venue analysis.

Age within SLA? If not, mark stale and separate.

De-dupe by (device, time bucket, rounded lat/lon, source).

Physics checks pass (no teleports, no 900 km/h on city streets).

Centroid watchlist spike? Treat as unknown until corroborated.

Supplier hygiene: first-party preferred; monitor replay rates.
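Monitoring replay rates per supplier can start as a simple ratio. The sketch below makes several assumptions:

- Rows carry hypothetical `device_id`, `event_ts` (epoch seconds), `lat`, `lon`, and `source` keys.
- It reuses the checklist’s composite-key idea with a one-minute bucket.
- It compares each supplier’s duplicate share against a baseline you maintain yourself.

The 5-point tolerance is an arbitrary example. The output is a list of candidates for quarantine and human review, not an automatic verdict.

```python
from collections import Counter


def composite_key(row, bucket_s=60, decimals=4):
    """(device, time bucket, rounded lat/lon, source), as in the checklist."""
    return (
        row["device_id"],
        row["event_ts"] // bucket_s,
        round(row["lat"], decimals),
        round(row["lon"], decimals),
        row["source"],
    )


def replay_rates(rows, key_fn=composite_key):
    """Share of each supplier's rows whose key was already seen (i.e., near-duplicates)."""
    totals, dupes, seen = Counter(), Counter(), set()
    for row in rows:
        src = row["source"]
        totals[src] += 1
        key = key_fn(row)
        if key in seen:
            dupes[src] += 1
        else:
            seen.add(key)
    return {src: dupes[src] / totals[src] for src in totals}


def quarantine_candidates(rates, baseline, tolerance=0.05):
    """Suppliers whose replay rate exceeds their own baseline by more than `tolerance`."""
    return sorted(src for src, rate in rates.items() if rate > baseline.get(src, 0.0) + tolerance)


rows = [
    {"device_id": "a", "event_ts": 1_000, "lat": 1.0, "lon": 2.0, "source": "s1"},
    {"device_id": "a", "event_ts": 1_010, "lat": 1.0, "lon": 2.0, "source": "s1"},  # replayed
    {"device_id": "b", "event_ts": 1_000, "lat": 1.0, "lon": 2.0, "source": "s2"},
    {"device_id": "c", "event_ts": 5_000, "lat": 3.0, "lon": 4.0, "source": "s2"},
]
rates = replay_rates(rows)
print(rates, quarantine_candidates(rates, baseline={"s1": 0.10, "s2": 0.00}))
```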

References

Adjust. 2021. “What Is Mobile Ad Fraud?” Accessed November 2, 2025. https://www.adjust.com/glossary/mobile-ad-fraud/.

AppsFlyer. n.d. “What Is SDK Spoofing?” Accessed November 2, 2025. https://www.appsflyer.com/glossary/sdk-spoofing/.

de Witt, Stijn. 2016. “Default Locations per Country for MaxMind.” Accessed November 2, 2025. https://stijndewitt.com/2016/05/26/default-locations-per-country-for-maxmind/.

Feamster, Nick, et al. 2021. GPS-Based Geolocation of IP Addresses. University of Chicago Internet Equity Initiative. Accessed November 2, 2025. https://internetequity.uchicago.edu/wp-content/uploads/2021/10/GPS-Based-Geolocation.pdf.

Hill, Kashmir. 2016. “How an Internet Mapping Glitch Turned a Kansas Farm into a Digital Hell.” Gizmodo, August 9, 2016. Accessed November 2, 2025. https://gizmodo.com/the-internet-mapped-hundreds-of-millions-of-ip-address-1784494744.

MaxMind Support. 2023. “Why Are Some IP Addresses Located in the Center of the Country?” Accessed November 2, 2025. https://support.maxmind.com/hc/en-us/articles/4407645686811.

Poese, Ingmar, et al. 2011. “IP Geolocation Databases: Unreliable?” ACM SIGCOMM Computer Communication Review 41 (2): 53–56. https://dl.acm.org/doi/10.1145/1925861.1925865.

Unacast. 2025. “How to Spot Bad Location Data Before It Breaks Your Product.” June 17, 2025. Accessed November 2, 2025. https://www.unacast.com/post/spot-bad-location-data-before-breaks-product.

Wikipedia. 2025. “Replay Attack.” Last modified August 31, 2025. Accessed November 2, 2025. https://en.wikipedia.org/wiki/Replay_attack.